How to Prioritize Feature Requests (Without Going Crazy)

Learn practical frameworks to prioritize feature requests. Stop building the wrong things and focus on what actually matters to customers and the business.

You have 200 feature requests. You can build 10 this quarter.

How do you choose?

This is the defining challenge of product management. Get it right, and you ship things customers love. Get it wrong, and you waste months on features nobody uses.

This guide gives you practical frameworks to prioritize features—without drowning in spreadsheets or endless debates.

Why Prioritization Is Hard

The Stakeholder Problem

Everyone has opinions:

- Sales wants the feature that closes the deal

- Support wants the fix that reduces tickets

- Engineering wants the refactor that reduces debt

- Customers want their specific request

- Leadership wants the strategic initiative

Everyone's right. Everyone's also partly wrong.

The Data Problem

Prioritization needs data, but:

- Request counts are unreliable (loud minority bias)

- Revenue correlation is unclear

- Impact estimates are guesses

- Effort estimates are wrong

You're making high-stakes decisions with low-confidence information.

The Emotion Problem

Some requests feel important because:

- A big customer yelled loudly

- The CEO had an idea in the shower

- A competitor just launched it

- An engineer is excited about the tech

Feelings aren't data.

The Meta-Framework

Before diving into specific frameworks, understand this:

Step 1: Define Your Lens

What matters most right now?

| Goal | Prioritize For |
|------|----------------|
| User acquisition | Features that attract new users |
| Retention | Features that keep users coming back |
| Revenue | Features that enterprise customers pay for |
| Efficiency | Features that reduce support/ops burden |
| Platform | Features that enable future features |

Pick one. Maybe two. Not five.

Step 2: Filter First

Not everything deserves detailed prioritization:

Auto-include:

- Critical bugs (breaking core functionality)

- Security issues

- Legal/compliance requirements

Auto-exclude:

- Single-customer requests (unless strategic)

- Requests that contradict product vision

- Features competitors have that don't fit your strategy

Actually prioritize: Everything else.
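The filter rules above can be sketched as a small triage function. This is a minimal sketch, assuming a hypothetical `Request` record whose fields (`kind`, `customer_count`, `strategic`, `fits_strategy`) stand in for whatever your tracker actually stores; the sample requests are made up.

```python
from dataclasses import dataclass

# Hypothetical request record; field names are illustrative, not from any tool.
@dataclass
class Request:
    title: str
    kind: str            # e.g. "critical_bug", "security", "compliance", "feature"
    customer_count: int  # distinct customers asking for it
    strategic: bool      # is the requesting customer strategically important?
    fits_strategy: bool  # consistent with product vision and strategy?

AUTO_INCLUDE_KINDS = {"critical_bug", "security", "compliance"}

def triage(req: Request) -> str:
    """Return 'include', 'exclude', or 'prioritize' per the Step 2 rules."""
    if req.kind in AUTO_INCLUDE_KINDS:
        return "include"       # bugs, security, legal/compliance skip scoring
    if req.customer_count <= 1 and not req.strategic:
        return "exclude"       # single-customer request, not strategic
    if not req.fits_strategy:
        return "exclude"       # contradicts vision or strategy
    return "prioritize"        # everything else goes to Step 3

backlog = [
    Request("Login crash on Safari", "critical_bug", 40, False, True),
    Request("Custom logo for one client", "feature", 1, False, True),
    Request("Bulk edit tasks", "feature", 25, False, True),
    Request("Built-in video chat", "feature", 12, False, False),
]

for req in backlog:
    print(f"{triage(req):<10} {req.title}")
```

Checking the auto-include rules first means a critical bug is never filtered out just because only one customer reported it.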

Step 3: Score and Stack

Apply a framework to your filtered list. Compare. Decide.

Step 4: Revisit

Priorities change. Re-prioritize monthly or quarterly.

Framework 1: RICE (Most Popular)

What It Is

RICE scores features on four dimensions:

- Reach: How many users will this affect per time period?

- Impact: How much will it affect them? (Scale: 0.25, 0.5, 1, 2, 3)

- Confidence: How sure are we about estimates? (%)

- Effort: How many person-weeks to build?

Formula: RICE = (Reach × Impact × Confidence) / Effort

Example

| Feature | Reach | Impact | Confidence | Effort | RICE Score |
|---------|-------|--------|------------|--------|------------|
| Dark mode | 5000/mo | 0.5 | 80% | 2 weeks | 1000 |
| SSO | 500/mo | 3 | 90% | 4 weeks | 338 |
| Export API | 200/mo | 2 | 70% | 6 weeks | 47 |

Dark mode wins—high reach, low effort.
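The RICE formula as a short sketch that reproduces the example. Assumptions: reach is users per month, confidence is written as a fraction (80% becomes 0.8), and effort is in person-weeks; the `IMPACT` labels follow the 0.25-3 scale given in the tips.

```python
# RICE = (Reach × Impact × Confidence) / Effort
IMPACT = {"massive": 3, "high": 2, "medium": 1, "low": 0.5, "minimal": 0.25}

def rice(reach: float, impact: float, confidence: float, effort_weeks: float) -> float:
    """Reach per period × impact × confidence, divided by person-weeks of effort."""
    if effort_weeks <= 0:
        raise ValueError("effort must be positive")
    return reach * impact * confidence / effort_weeks

features = {
    "Dark mode": rice(5000, IMPACT["low"], 0.80, 2),
    "SSO": rice(500, IMPACT["massive"], 0.90, 4),
    "Export API": rice(200, IMPACT["high"], 0.70, 6),
}

# Highest score first.
for name, score in sorted(features.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<12} {score:7.1f}")
```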

When to Use

- You have reasonable data on reach

- You can estimate effort with moderate confidence

- You need to compare many features objectively

When to Avoid

- Very early stage (no usage data)

- Features are wildly different in type

- Strategic initiatives that don't fit the formula

Tips

- Be honest about confidence (it's usually lower than you think)

- Use the same person or team for all effort estimates (for consistency)

- Impact scale: 3 = massive, 2 = high, 1 = medium, 0.5 = low, 0.25 = minimal

Framework 2: ICE (Simpler)

What It Is

ICE scores features on three dimensions, each 1-10:

- Impact: How much value does this create?

- Confidence: How confident are we in the estimate?

- Ease: How easy is this to build? (inverse of effort)

Formula: ICE = (Impact + Confidence + Ease) / 3, or simply the sum (both produce the same ranking)

Example

| Feature | Impact | Confidence | Ease | ICE Score |
|---------|--------|------------|------|-----------|
| Dark mode | 5 | 8 | 9 | 7.3 |
| SSO | 9 | 7 | 4 | 6.7 |
| Export API | 6 | 5 | 3 | 4.7 |
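A sketch of the ICE arithmetic using the scores from the example table. It also checks that summing the three ratings instead of averaging them gives the same ranking, since dividing every total by 3 only rescales the scores.

```python
# ICE inputs are each rated 1-10 (copied from the example table).
SCORES = {
    "Dark mode": (5, 8, 9),
    "SSO": (9, 7, 4),
    "Export API": (6, 5, 3),
}

def ice(impact: int, confidence: int, ease: int) -> float:
    """Average of the three 1-10 ratings."""
    for rating in (impact, confidence, ease):
        if not 1 <= rating <= 10:
            raise ValueError("each ICE rating must be between 1 and 10")
    return (impact + confidence + ease) / 3

by_average = sorted(SCORES, key=lambda f: ice(*SCORES[f]), reverse=True)
by_sum = sorted(SCORES, key=lambda f: sum(SCORES[f]), reverse=True)

for feature in by_average:
    print(f"{feature:<12} {ice(*SCORES[feature]):.1f}")
print("Same ranking when summing:", by_average == by_sum)
```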

When to Use

- Quick prioritization needed

- Limited data available

- Smaller backlogs

- Team is new to frameworks

When to Avoid

- Large backlogs needing fine distinctions

- Stakeholders want "objective" scoring

- Decisions are controversial

Framework 3: Value vs. Effort Matrix

What It Is

A 2×2 matrix that categorizes features:

| | Low Effort | High Effort |
|---|---|---|
| High Value | Quick Wins (Do First) | Big Bets (Plan Carefully) |
| Low Value | Fill-Ins (Maybe Later) | Time Sinks (Avoid) |

How to Use

- List all features

- Estimate value (1-10)

- Estimate effort (1-10)

- Plot on matrix

- Prioritize: Quick Wins → Big Bets → Fill-Ins, and avoid Time Sinks entirely
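The plotting and ordering steps can also be done in code once value and effort are estimated. This sketch rests on two assumptions: a score above 5.5 (the midpoint of the 1-10 scale) counts as "high", and the feature names and estimates are hypothetical. The ordering follows the rule above, with Time Sinks dropped.

```python
def quadrant(value: float, effort: float, threshold: float = 5.5) -> str:
    """Map 1-10 value and effort estimates onto the 2x2 matrix.

    The 5.5 cut-off is an assumption; adjust it for your team.
    """
    high_value = value > threshold
    low_effort = effort <= threshold
    if high_value and low_effort:
        return "Quick Win"
    if high_value:
        return "Big Bet"
    if low_effort:
        return "Fill-In"
    return "Time Sink"

ORDER = {"Quick Win": 0, "Big Bet": 1, "Fill-In": 2}  # Time Sinks are left out

# Hypothetical (value, effort) estimates.
estimates = {
    "Bulk edit": (8, 3),
    "Offline mode": (7, 9),
    "New icons": (3, 2),
    "Legacy importer": (2, 8),
}

plan = sorted(
    (name for name, (v, e) in estimates.items() if quadrant(v, e) in ORDER),
    key=lambda name: ORDER[quadrant(*estimates[name])],
)
print(plan)
```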

When to Use

- Roadmap planning sessions

- Stakeholder alignment meetings

- Visual learners on the team

When to Avoid

- Features cluster in one quadrant

- Need more nuance than four buckets

Framework 4: Kano Model

What It Is

Categorizes features by their impact on customer satisfaction:

| Type | Definition | Effect |
|------|------------|--------|
| Must-Haves | Expected, dissatisfying if absent | No delight, but failure without |
| Performance | More is better (linear satisfaction) | Direct satisfaction correlation |
| Delighters | Unexpected, delightful if present | Creates advocates |
| Indifferent | Customers don't care | No impact either way |
| Reverse | Presence causes dissatisfaction | Actively harmful |

Example

For a project management tool:

- Must-Have: Create and assign tasks

- Performance: Speed, number of integrations

- Delighter: AI that predicts deadlines

- Indifferent: Customizable fonts

- Reverse: Forced gamification
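One widely used way to assign these categories is the Kano questionnaire. Each respondent answers a functional question ("How would you feel if the product had this?") and a dysfunctional one ("How would you feel if it didn't?") using five options, and the answer pair is looked up in the standard Kano evaluation table. The sketch below encodes that table with this article's names (Delighter for "attractive", Performance for "one-dimensional") plus Q for contradictory answers, then takes the most common category across respondents. The answer labels and survey responses are illustrative.

```python
from collections import Counter

ANSWERS = ["like", "expect", "neutral", "tolerate", "dislike"]

# Standard Kano evaluation table: rows = functional answer, columns = dysfunctional.
# D = Delighter, P = Performance, M = Must-Have, I = Indifferent,
# R = Reverse, Q = Questionable (contradictory answers).
TABLE = [
    # like expect neutral tolerate dislike   <- dysfunctional answer
    ["Q", "D", "D", "D", "P"],  # like
    ["R", "I", "I", "I", "M"],  # expect
    ["R", "I", "I", "I", "M"],  # neutral
    ["R", "I", "I", "I", "M"],  # tolerate
    ["R", "R", "R", "R", "Q"],  # dislike
]

def kano_category(functional: str, dysfunctional: str) -> str:
    """Look up one respondent's answer pair."""
    return TABLE[ANSWERS.index(functional)][ANSWERS.index(dysfunctional)]

def classify(responses: list[tuple[str, str]]) -> str:
    """Most frequent category across respondents (ties: first seen wins)."""
    counts = Counter(kano_category(f, d) for f, d in responses)
    return counts.most_common(1)[0][0]

# Hypothetical survey answers for "AI that predicts deadlines".
responses = [("like", "neutral"), ("like", "tolerate"), ("neutral", "neutral"), ("like", "neutral")]
print(classify(responses))
```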

When to Use

- Understanding customer expectations

- Deciding between basic vs. innovative features

- New product/market

When to Avoid

- Comparing similar features

- Need quantitative scores

Framework 5: Weighted Scoring

What It Is

Score features on multiple criteria with different weights:

| Criteria | Weight | Feature A | Feature B | Feature C |
|----------|--------|-----------|-----------|-----------|
| Revenue impact | 30% | 8 | 5 | 3 |
| User requests | 25% | 6 | 9 | 4 |
| Strategic fit | 25% | 7 | 6 | 9 |
| Ease of build | 20% | 9 | 4 | 5 |
| Weighted Score | | 7.45 | 6.05 | 5.15 |

How to Calculate

Multiply each score by its criterion's weight, then sum the products. For Feature A: (8 × 0.30) + (6 × 0.25) + (7 × 0.25) + (9 × 0.20) = 2.40 + 1.50 + 1.75 + 1.80 = 7.45.
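The same calculation as a short sketch, using the weights and scores from the table with the criteria abbreviated to hypothetical keys (`revenue`, `requests`, `strategy`, `ease`). It also rejects weights that don't sum to 100%.

```python
# Criterion weights (must sum to 1.0) and 1-10 scores from the table.
WEIGHTS = {"revenue": 0.30, "requests": 0.25, "strategy": 0.25, "ease": 0.20}

FEATURES = {
    "Feature A": {"revenue": 8, "requests": 6, "strategy": 7, "ease": 9},
    "Feature B": {"revenue": 5, "requests": 9, "strategy": 6, "ease": 4},
    "Feature C": {"revenue": 3, "requests": 4, "strategy": 9, "ease": 5},
}

def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Sum of score × weight over every criterion."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    return sum(scores[criterion] * w for criterion, w in weights.items())

for name, scores in FEATURES.items():
    print(f"{name}: {weighted_score(scores, WEIGHTS):.2f}")
```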

When to Use

- Multiple stakeholders with different priorities

- Need transparency in decision-making

- Complex trade-offs

When to Avoid

- Weights become political battles

- Analysis paralysis risk

Framework 6: MoSCoW

What It Is

Categorize features into:

- Must Have: Non-negotiable for this release

- Should Have: Important, but not critical

- Could Have: Nice to have, if time permits

- Won't Have: Explicitly out of scope

When to Use

- Sprint/release planning

- Fixed deadlines with flexible scope

- Stakeholder alignment

Example

For Q2 launch:

- Must: User authentication, core dashboard

- Should: Dark mode, export feature

- Could: Advanced filters, mobile optimization

- Won't: Multi-language support, offline mode
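For a fixed deadline with flexible scope, one way to apply the categories is to schedule every Must, then fill the remaining capacity with Shoulds, then Coulds. A sketch of that greedy fill for the Q2 example, assuming hypothetical person-week estimates and a hypothetical 12-week capacity:

```python
# Hypothetical backlog: (feature, MoSCoW category, effort in person-weeks).
BACKLOG = [
    ("User authentication", "must", 3),
    ("Core dashboard", "must", 4),
    ("Dark mode", "should", 2),
    ("Export feature", "should", 3),
    ("Advanced filters", "could", 2),
    ("Mobile optimization", "could", 4),
    ("Multi-language support", "wont", 6),
    ("Offline mode", "wont", 8),
]

def plan_release(backlog: list[tuple[str, str, int]], capacity_weeks: int) -> list[str]:
    """Musts are non-negotiable; Shoulds, then Coulds, fill what capacity remains."""
    musts = [item for item in backlog if item[1] == "must"]
    used = sum(effort for _, _, effort in musts)
    if used > capacity_weeks:
        raise ValueError("Must Haves alone exceed capacity; cut scope or move the date")
    plan = [name for name, _, _ in musts]
    for category in ("should", "could"):  # "wont" items are never scheduled
        for name, cat, effort in backlog:
            if cat == category and used + effort <= capacity_weeks:
                plan.append(name)
                used += effort
    return plan

print(plan_release(BACKLOG, capacity_weeks=12))
```

Raising an error when the Musts don't fit makes the real trade-off explicit instead of silently dropping a Must.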

Handling Specific Scenarios

Scenario: "Big Customer Asked for This"

Don't auto-build. Evaluate:

- Is this request unique to them, or would others benefit?

- What's their revenue vs. cost to build?

- Would building this compromise the product for others?

Red flag: "If you build X, we'll buy." Often a bluff. And X becomes Y becomes Z.

Scenario: "Competitor Just Launched This"

Don't panic-build. Consider:

- Is their market the same as yours?

- Are your customers asking for it?

- Can you do it better, or just the same?

Better strategy: Focus on your differentiation, not feature parity.

Scenario: "CEO Wants This"

Don't auto-prioritize. Instead:

- Understand the underlying goal

- Share data on current priorities

- Propose trade-offs ("We can do this if we push back X")

Scenario: "Support Is Drowning"

Balance carefully:

- Calculate the actual cost of support tickets

- Some fixes have huge ROI

- But don't become purely reactive
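A back-of-the-envelope way to calculate the cost of one ticket category and how quickly a fix pays for itself. Every number below is hypothetical, and the sketch assumes the fix eliminates those tickets entirely and ignores second-order effects such as churn.

```python
def monthly_ticket_cost(tickets_per_month: int, minutes_per_ticket: float, hourly_cost: float) -> float:
    """Agent time spent on one ticket type, in currency per month."""
    return tickets_per_month * minutes_per_ticket / 60 * hourly_cost

def payback_months(fix_cost: float, monthly_savings: float) -> float:
    """Months until a fix pays for itself, assuming it eliminates those tickets."""
    if monthly_savings <= 0:
        raise ValueError("savings must be positive")
    return fix_cost / monthly_savings

# Hypothetical: 400 password-reset tickets a month, 15 minutes each, $40/hour.
savings = monthly_ticket_cost(400, 15, 40)
# Hypothetical fix: 2 engineer-weeks at $4,000 per week.
months = payback_months(2 * 4000, savings)
print(f"${savings:,.0f}/month in support time; fix pays back in {months:.1f} months")
```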

Prioritization Ritual

Weekly

- Review newly captured requests (5 min)

- Quick ICE score for new items (10 min)

- No major decisions—just keep list updated

Monthly

- Review top 20 requests in detail

- Update RICE/ICE scores with new data

- Identify candidates for next quarter

Quarterly

- Full prioritization exercise

- Stakeholder input session

- Commit to roadmap items

Avoiding Common Mistakes

Mistake 1: Over-Engineering Prioritization

A spreadsheet with 15 weighted criteria is probably overkill. Start simple.

Mistake 2: Prioritizing in Isolation

Involve engineering (for effort), support (for pain), and sales (for revenue). The PM shouldn't guess alone.

Mistake 3: Never Re-Prioritizing

Priorities change. What was #1 last quarter might be #10 now. Review regularly.

Mistake 4: Ignoring Effort

A high-value feature that takes 6 months might be worse than three medium-value features that take 2 months each.
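Comparing value delivered per month of effort makes this trade-off concrete. A sketch with hypothetical value scores (8 for the 6-month feature, 5 for each 2-month feature), assuming the three smaller features' values simply add up:

```python
# Hypothetical value scores (1-10) and durations in months.
big_feature = [{"value": 8, "months": 6}]
medium_features = [{"value": 5, "months": 2} for _ in range(3)]

def value_per_month(items: list[dict[str, int]]) -> float:
    """Total value divided by total months of effort."""
    return sum(i["value"] for i in items) / sum(i["months"] for i in items)

print(round(value_per_month(big_feature), 2))      # one 6-month feature
print(round(value_per_month(medium_features), 2))  # three 2-month features
```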

Mistake 5: Skipping "Won't Do"

If you can't say no, your prioritization is meaningless. Every yes is an implicit no to something else.

Getting Stakeholder Buy-In

1. Share the Framework

Before prioritization, explain how decisions will be made. People accept outcomes better when they understand the process.

2. Make Trade-Offs Visible

Don't just say "we're building X." Say "we're building X, which means we're not building Y this quarter."

3. Show the Data

Whatever framework you use, share the scoring. Transparency reduces politics.

4. Create Feedback Loops

When shipped features succeed (or fail), share results. This builds trust in the process.

Tools That Help

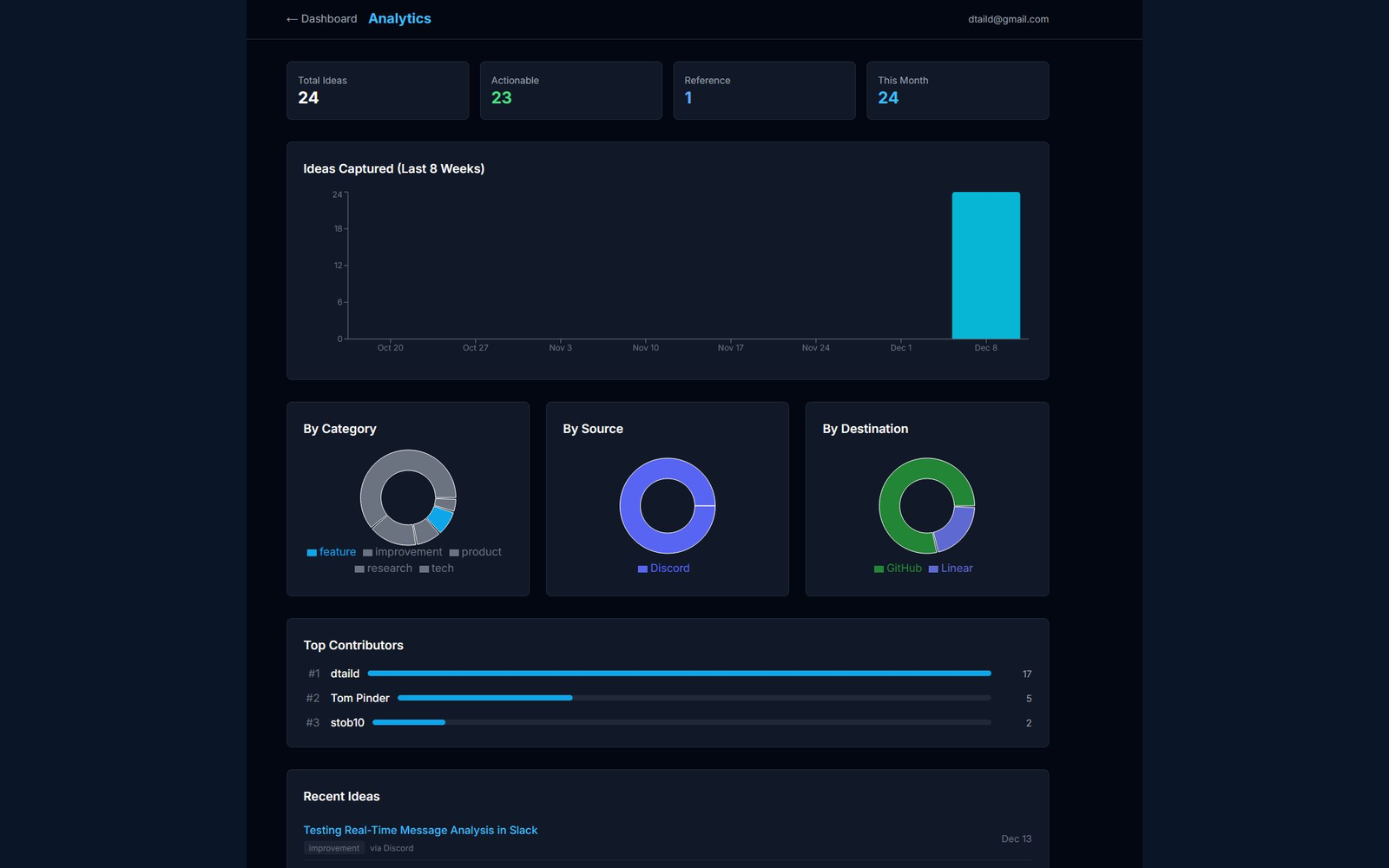

| Tool | Prioritization Feature | Best For |
|------|------------------------|----------|
| ProductBoard | Built-in scoring | Full PM workflow |
| Airfocus | RICE, custom scoring | Prioritization-focused |
| Notion | DIY scoring tables | Flexible/custom |
| Canny | Vote counts | Customer-driven |
| IdeaLift | Request aggregation + AI | Capture → Prioritize |
| Airtable | Custom scoring models | Data-heavy teams |

Sample Prioritization Session

Prep (30 min before)

- Pull list of candidate features

- Pre-score with ICE/RICE

- Gather relevant data (request counts, revenue, etc.)

Session (60 min)

- Align on lens (5 min): "This quarter we're prioritizing for retention"

- Review auto-includes (5 min): Security issues, critical bugs

- Quick filter (10 min): Remove obvious no's

- Score discussion (30 min): Walk through top 15, adjust scores, debate

- Commit (10 min): Lock in top 5-8 for the quarter

Post-Session

- Document decisions and rationale

- Communicate to stakeholders

- Update roadmap tool

Conclusion

Prioritization isn't about finding the "right" answer. It's about making the best decision with incomplete information, transparently, and moving forward.

Start simple:

- Pick one framework (ICE is easiest to start)

- Score your top 20 requests

- Make decisions and ship

Iterate as you learn what works for your team.

The worst prioritization is no prioritization—building whatever feels urgent that week. Any framework beats that.

Ready to capture all the requests worth prioritizing? Try IdeaLift free →
